Last summer, Elon Musk’s X (formerly Twitter) promised enhanced brand safety tools for advertisers that would allow brands to keep their ads from appearing near hateful content. However, several companies and organizations have recently spoken out, some threatening to stop advertising, after their ads appeared next to pro-Hitler, neo-Nazi, and antisemitic content.

In August, X CEO Linda Yaccarino, speaking from New York in a live audio event on X, said, “Think about it in terms of brand safety: I wrap a security blanket around you, my brand, my chief marketing officer, and say your ads will only air next to content that is appropriate for you, of your choice,” the Wall Street Journal reported. And in an August post on X, Yaccarino assured advertisers that newly announced controls for advertisers “completely reinforces our commitment to brand safety.”

But last week, a spokesperson for A.T. Still University messaged me about the university’s ad on Elon Musk’s X, which appeared adjacent to a post of a video glorifying Adolf Hitler. The blue-check account that posted the video, “EuroHighlander,” is dedicated to posting Nazi propaganda and antisemitic content. ATSU, the country’s oldest school of osteopathic medicine, wrote:

"ATSU was unaware of X’s placement and will seriously consider withdrawing advertising on the site. ATSU unequivocally condemns antisemitism, all forms of hate speech, and discrimination."

Today, an ad on X for Michelin News, featuring Yves Chapot, General Manager and CFO of Michelin, appeared on the account that posted the Hitler video. A spokesperson from Michelin messaged me today: "We were profoundly surprised and shocked to discover our video on an account whose views we vehemently condemn, as they are in direct opposition to all of Michelin Group's values. We have, of course, taken immediate action with the X network. We are committed to continually improving our processes to prevent such incidents from happening again in the future." The ad appeared adjacent to a post in which the blue-check account said that X had blocked the Hitler video post in Germany, where content glorifying Nazism is illegal.

The account posted a screenshot of X’s notification with a nose emoji, presumably invoking the antisemitic trope about Jewish noses, along with this quote tweet: “Things that can happen over here in the not-so free 𝘑𝐄𝘞𝐔.” The European Union announced in December that it was launching a formal investigation into X for alleged breaches of the EU’s Digital Services Act.

Earlier this month, a spokesperson for Edmunds, a division of CarMax (NYSE: KMX), messaged me, “Like all other X advertisers, Edmunds does not control when or where the ads may appear to users as they use the platform.” Edmunds’ ad had appeared next to a post by Stew Peters, an X “influencer” whose account is devoted to posting and monetizing anti-vaccine and antisemitic content.

Peters posted to his more than half-million followers on X in January: “BREAKING: Quicken Loans also gives interest-free loans to Jews but not goyim.” His post included a screenshot of a 2018 Times of Israel story featuring Dan Gilbert, co-founder of Rocket Mortgage and owner of the NBA Cleveland Cavaliers.

A spokesperson for Rocket Mortgage (NYSE: RKT), parent company of Quicken Loans, said in a statement to me that Peters’ claim was “grossly misinformed,” adding that “mortgage approval, along with the interest rates and fees on a loan, are based on a homebuyer’s credit, income and assets — their religious affiliation is never a factor.”

An ad for the nonprofit Institute for Humane Studies at George Mason University also appeared on X adjacent to Peters’ post about Quicken Loans.

A spokesperson for the IHS messaged me: “It’s important to note that we do not have direct control over where X positions our advertisements when using these broad parameters. However, upon encountering situations like this where our ads appear alongside content that contradicts our organization’s values and principles, we take immediate action. In this specific case, we have implemented measures to prevent our advertisements from being associated with this user in the future.”

In December, the CEO of tastytrade, a fintech company owned by the UK’s IG Group (LON:IGG), told me about the company’s ad on X appearing next to an antisemitic, racist, misogynistic post by a blue-check account: “In no uncertain terms, unless we’re able to control where our ads are being placed, I’m not interested. It’s vile, disgusting, and I won’t support it.”

And last week, an ad for Data Storage Corporation (NASDAQ: DTST) appeared adjacent to the post by the account featuring the video glorifying Hitler, with a link to the antisemitic neo-Nazi film “Europa: The Last Battle,” which blames Jews for World War II. No one from DTST has replied to inquiries.

X Business announced in a January blog post, “Expanded Brand Safety Controls for Advertisers,” saying X was expanding access to its “successful vetted inventory pilot with Integral Ad Science” for video posts.

The account and its Hitler video post remain intact on X in the U.S., as of this writing. No one from X or Integral Ad Science (NASDAQ: IAS) has responded to inquiries about the Hitler video post and ads adjacent to it.

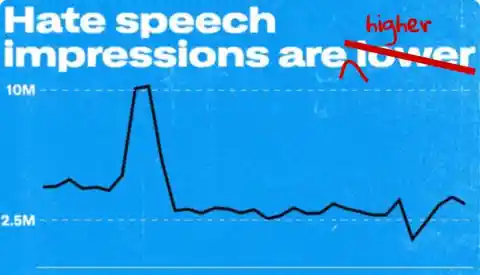

Hate speech on X goes largely unchecked, protected by Musk’s commitment to free speech. “Racism and antisemitism have zero resistance on X,” entrepreneur Mark Cuban, a stakeholder in the NBA’s Dallas Mavericks, said in a statement to the Washington Examiner in February. What’s more, X may be incentivizing content creators to post hate speech.

In November, Media Matters reported that a pro-Hitler, Holocaust-denying account was paid $3,000 in ad revenue sharing, according to a receipt posted by the account. X’s ad revenue sharing program offers payments to “creator” accounts, including those that post racist and antisemitic content. Because some advertisers have said they don’t control where their ads appear on X, their ad payments may be funding such accounts.

Other large corporations were among the first to voice concerns about brand safety and advertising on X last year.

“‘For many communities, [Musk’s] willingness to leverage success and personal financial resources to further an agenda under the guise of freedom of speech is perpetuating racism resulting [in] direct threats to their communities and a potential for brand safety compromise we should all be concerned about,’ wrote McDonald’s chief marketing and customer experience officer, Tariq Hassan,” as Semafor reported in April.

In May, ice cream giant Ben & Jerry’s announced it was ending paid advertising on then-Twitter due to the proliferation of hate speech on the platform. Ben & Jerry’s, a division of Unilever (NYSE: UL), said in a statement:

“We’ve watched with great concern the developments at Twitter following Elon Musk’s purchase of the social media platform. Hate speech is up dramatically while content moderation has become all but non-existent. In addition to the changes on the platform that have led to an increase in hate speech, Musk himself has doubled down on dangerous anti-democratic lies and white nationalist hate speech. The platform has become a threatening and even dangerous space for people from so many backgrounds, including people who are Black, Brown, trans, gay, women, people with disabilities, Jewish, Muslim and the list goes on. This is unconscionable in addition to being plain bad business.”

And bad business choices matter for public corporations. Directors of publicly traded corporations have a fiduciary duty to shareholders to protect the company’s reputation from harm, and a company’s advertising choices and brand associations affect its reputation.

“As a company, reputation is your currency. Never take that for granted,” as Alexandra Clark, Shopify’s VP of communications and public affairs, posted on LinkedIn last month. Ironically, Shopify (NYSE: SHOP) recently announced a partnership with X, while several other companies have sought to distance themselves from Musk’s platform, to protect their reputations from associated brand risk. I posted an inquiry to Shopify CEO Tobi Lutke. In response, he blocked me.

Musk, meanwhile, has deflected responsibility, blaming others for the advertiser exodus from X. In September, Musk blamed the Anti-Defamation League (ADL), a Jewish advocacy group, threatening to sue the nonprofit for revenue lost from advertisers and claiming the ADL pressured them. Musk posted: “Our US advertising revenue is still down by 60%, primarily due to pressure on advertisers by @ADL (that’s what advertisers tell us), so they almost succeeded in killing X/Twitter!”

However, Ben & Jerry’s said in a message to me on September 15, “We were not contacted by the ADL on this issue, and they had nothing to do with our decision. Our statement lays out the reasons we left the platform as an advertiser.” Which advertisers told X they were pressured by the ADL, as Musk claimed, is unknown.

Musk has also attempted to blame researchers and journalists for the advertiser exodus from X.

In July, Musk sued the nonprofit Center for Countering Digital Hate (CCDH) over its research showing a proliferation of disinformation and hate speech on the platform. The CCDH is represented by attorney Roberta Kaplan of Kaplan Hecker & Fink LLP, who recently won $83.3 million in damages against Donald Trump on behalf of her client E. Jean Carroll.

Kaplan responded to a letter from Musk’s attorney, saying, “Simply put, there is no bona fide legal grievance here. Your effort to wield that threat anyway, on a law firm’s letterhead, is a transparent attempt to silence honest criticism. Obviously, such conduct could hardly be more inconsistent with the commitment to free speech purportedly held by Twitter’s current leadership.”

More advertisers fled X in November, after Musk endorsed an antisemitic conspiracy theory. Forbes reported:

“Musk endorsed an explicitly antisemitic conspiracy theory, and a report from watchdog Media Matters found that ads from major companies including IBM and Amazon had been placed next to content promoting Nazis and white nationalism, prompting advertisers including Apple, Disney and IBM to pull ads from the platform.”

Musk posted on X, promising to file “a thermonuclear lawsuit against Media Matters.” Judd Legum, publisher of Popular Information, posted on X that Musk’s “statement confirms all of Media Matters reporting. He admits major brand advertisers were displayed alongside racist content. The defense is that there were many other ads that were not displayed alongside racist content. Very strange argument overall.”

Executives at X reporting to Yaccarino have mostly dodged questions about antisemitism on the platform. On September 14, Yaccarino announced executive hires in a post. The new senior talent included Monique Pintarelli, Head of the Americas; Carrie Stimmel, Global Agency Leader; and Brett Weitz, Head of Content, Talent and Brand Sales. In response to my inquiries to them about antisemitism on X, Pintarelli and Weitz blocked me.

Stimmel apparently left X quietly at the end of 2023, as I discovered from her LinkedIn profile. In an October post on X, she had expressed concern for her young son’s safety while they traveled by train. She wrote that she “pulled him close and whispered that I needed him not to say he's Jewish out loud on that train.”

Earlier this month, I posted an inquiry to Weitz, asking about ads next to the Hitler video and the Europa film post. Weitz’s LinkedIn profile says he’s “passionate and committed to diversity, equity, and inclusion celebrating all cultures and diverse stories and voices.” Musk has said “DEI must DIE” and called diversity initiatives “discrimination” against white people.

Last week, Musk offered to cover legal expenses for those “discriminated against by Disney or its subsidiaries (ABC, ESPN, Marvel, etc).” In his post on X, Musk cited a graphic of the entertainment giant’s diversity and inclusion policies. Musk has taken particular aim at Disney, which was among several large brands, including Apple and IBM, that stopped advertising on Musk’s X in November after he endorsed an antisemitic post. It wasn’t the first time Musk had offended the Jewish community.

In an interview with CNBC last May, Musk was asked about his tweets attacking Jewish philanthropist George Soros, a favorite target of antisemites. He doubled down, saying, “I’ll say what I want, and if the consequence of that is losing money, so be it.” Musk’s choices have consequences. X may indeed be losing money: according to a Fidelity filing in January, X has lost 72 percent of its value since Musk bought it for $44 billion.

Musk keeps saying what he wants to say, seemingly without regard to consequence. In November, Musk topped off his message to X advertisers who had left the platform due to hate speech and antisemitism. In a live event hosted by Andrew Ross Sorkin for The New York Times DealBook, Musk told advertisers “Go fuck yourself.”

But Yaccarino and Musk are now working to lure advertisers back to X, attempting to repair some of the damage done by Musk’s previous messages. In January, Musk visited Auschwitz for a well-publicized tour. But journalist Elad Nehorai posted on X: “As Elon Musk visits Auschwitz in order to use dead Jews to cover up his bigotry, a thread on his antisemitism.”

Last month, X announced it would launch a 100-person content moderation center in Austin, Texas, focused primarily on preventing child sexual exploitation. Joe Benarroch, X’s head of business operations, “said that the team will also help with other moderation enforcement, such as those forbidding hate speech,” Bloomberg reported. When I sent Benarroch an inquiry months ago about the proliferation of antisemitism on X, he blocked me.

Earlier this month, Yaccarino sent a letter urging current and former advertisers to “come back to X,” as Bloomberg reported, telling them what X is doing to keep kids safe on the platform. But as long as antisemitic, Nazi content is protected by X’s free speech commitment, advertisers will need to make their own decisions about brand safety.

No one from X or Integral Ad Science has responded to inquiries. X executives Joe Benarroch, Brett Weitz, and Monique Pintarelli blocked me after I posted inquiries to them on X.